Copyright Notice: This article is Copyright AI Factory Ltd. Ideas and code belonging to AI Factory may only be used with the direct written permission of AI Factory Ltd.

It is easy to let development strategy be overly dictated by the tools immediately at your disposal.

The premise of this article is to consider the benefits of stepping back to review your toolsets and environments, and of investing early in expanding or creating them, so that you increase your capacity to do the job that really needs to be done, rather than just the one that can be more easily reached.

This article is the natural successor to the previous article Adding New Muscle to Our AI Testing, which details the impact of bespoke generic tools on the progress of our Spades app.

This topic may sound obvious and even perhaps unnecessary, but I think it identifies an issue that is too easily neglected. It is too easy and common to find yourself late in a project having to retrospectively add in capabilities that should have been there at the start, and would have sped things up. The fast track of pursuing the apparently easier path can give the illusion of net faster progress, but this may well be sub-optimal.

Within this context, the type of work I am considering is AI game development: working on a program that has already progressed from a prototype into a working program, with the objective of developing it into a stronger program.

The Path of Least Resistance

Ok, you have jumped right in and maybe recycled some code to create an AI that plays legally and reasonably. Your program works and you want to make it better, so you are probably looking to make some incremental changes or slot in some new evaluation or feature with the idea of testing it. It is easy to work on stuff that is immediately within your gaze.

However you could step back and instead try to analyse the big picture, looking ahead, to imagine what you should ideally have at your disposal. The following section uses a simple graphical metaphor to represent this.

Looking at the Domain being Worked on

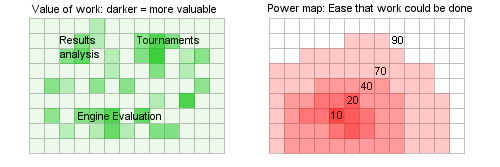

The diagram represents the idea of imagining the range of targets that might be desirable to achieve a good end result for a particular project. This is obviously rather simplistic, but on the right the red table arranges the range of possible tasks so they are ordered by difficulty. Dark red is easy and white very hard. The further you are from the dark red, the higher the work cost.

The other green table on the left is a map of possible tools, re-arranged to align with the red map, where the darker the green area the more useful the component. In practice this map may even have hundreds of labelled features, but here we have simplified to just three.

The implication here is obvious. Following the red map on the right encourages you to prioritise work to minimise effort. In this case, although "Tournament" is perhaps highly valuable, it is easy to prejudicially reject it in favour of other goals that are closer to the dark red "low effort" centre.

So this represents a myopic view of work where the goals are easily skewed to favour low effort over value. Any such myopic rejection stops you discovering ideas beyond that. This may sound too simplistic and pessimistic, but I suspect that having work overly driven by "effort required" is all too easy and very common. It can give the illusion of fast progress.

Forcing a Wider Longer Term view of the Work

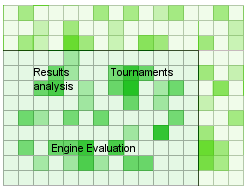

This is instinctively not that attractive because maybe you cannot see what you need. However it can be an invaluable thought experiment to sit down, project a possible future and imagine what "fantasy" facilities might best serve what you want to do. It is best at this point to completely ignore the cost and free your mind, so that you can imagine some facility and, assuming you then have that, imagine what further facility might build on it. Going through this "no limits" thought experiment allows your mind to discover what you would ideally have in an unlimited world, not constrained by intermediate rejection of components. If you assume these tools then the context horizon moves, and new tool ideas might emerge that re-shape your idea of the whole plan. These possibilities were beyond your previous horizon, so never got imagined. (See the diagram for this section, where the map of options expands further once you start thinking from a larger base.)

Having expanded out this "no limits" model of the work, you can then see that some expensive components allow other less expensive but valuable components to be added. You have extended your horizon beyond the limits of the diagram above. You can then also see how this "luxurious" model might be factored down and simplified to achieve the same net functionality but at a reduced cost. A little more restructuring and you find that maybe you have a complete system that has re-shaped your view of what you need.

This "no limits" approach is worth committing a couple of days to explore, if you can avoid being distracted by immediate demands.

Ok, this sounds a little nebulous with no concrete example offered yet, so I am going to map out the kind of projection we achieved and detail what we did and what impact this had on our work.

I should qualify that our path to success was not optimal throughout, and indeed we suffered from a myopic failure to provide some tools at earlier stages.

The AI Factory Evolution of Toolsets

This section is entirely about in-house bespoke tools, but that is not the complete picture of what is possible. There are off-the-shelf tools and SDKs for editing and debugging that can massively impact your success. For example, after having worked with so many editing systems I "discovered" folding (see A Folding Revolution). This massively improved my own personal productivity.

The AI Factory experience is however a good test case, as the complexities of just controlling development and game performance testing actually prove to be a domain that needs careful design and application. The difference between good and poor tools in this context can be the difference between success and complete failure.

Let's look at some of our bespoke tools.

(1) The AI Factory Testbed Framework

This was a vital step we committed to very early on, and was driven by seeing gaming companies inheriting game engines with completely different architectures and trying to wrap these in flimsy bespoke interfaces so that they could be used in products. This is a support nightmare, easily consuming vast amounts of time and making switching projects a new learning curve each time.

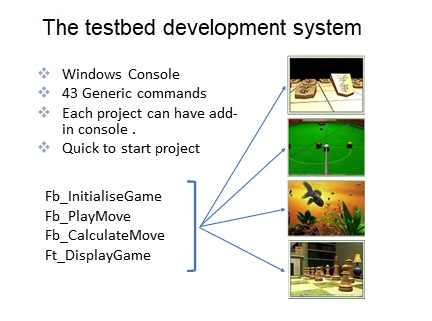

Our solution was to have a generic framework that could be shared by all projects, whether an aquarium, chess or snooker. They all shared an identical interface. They also shared a common architecture that stored the complete game history in one core record and depended on "rewind to start" and then "forward" to execute a takeback command.

Having achieved that sub-goal, a simpler linked option opened up: to provide a game development testbed that could talk directly to this common interface, providing sets of generic console commands available to all our engines.

This made the start-up of any game project much easier, as you only needed to define the four generic functions above for any engine in order to allow work on it. This was built around C++ so that member functions could be overridden to satisfy a specific game, but using a common name and structure that could be shared by the testbed. The testbed itself was built around the Windows console.
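The shared architecture described above (one core history record, with takeback done by "rewind to start" and then "forward") might be sketched as follows. This is only a minimal illustration: the class and function names (`GameEngine`, `ResetToStart`, `ApplyMove`, `CounterGame`) are hypothetical and are not the actual AI Factory interface, and moves are reduced to plain integers.

```cpp
#include <cstddef>
#include <vector>

// Hypothetical common interface; the names are illustrative only.
class GameEngine {
public:
    virtual ~GameEngine() = default;

    // Play a move and append it to the single core history record.
    void Play(int move) {
        ApplyMove(move);
        history_.push_back(move);
    }

    // Takeback: drop the last move, rewind to the start position,
    // then replay the remaining history forwards.
    bool TakeBack() {
        if (history_.empty()) return false;
        history_.pop_back();
        ResetToStart();
        for (int move : history_) ApplyMove(move);
        return true;
    }

    std::size_t MoveCount() const { return history_.size(); }

protected:
    // Game-specific parts, overridden by each engine.
    virtual void ResetToStart() = 0;
    virtual void ApplyMove(int move) = 0;

private:
    std::vector<int> history_;
};

// Toy engine: the "position" is just a running total of the moves played.
class CounterGame : public GameEngine {
public:
    int Total() const { return total_; }

protected:
    void ResetToStart() override { total_ = 0; }
    void ApplyMove(int move) override { total_ += move; }

private:
    int total_ = 0;
};
```

The attraction of replay-based takeback is that an engine never needs to write an "undo move" routine: a testbed written against the base class can offer takeback for any game that supplies the two overrides.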

(2) Generic Debugging

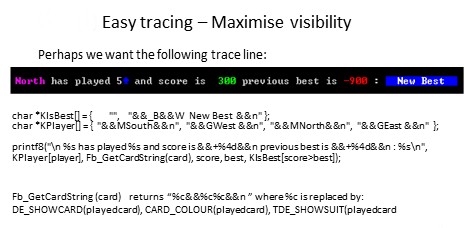

Once you have committed to a common interface and testbed command structure, it invites you to provide common debugging. This can be driven by the testbed console commands but manifests in the sources as replacements for printing that have embedded colour printing capability (see Intelligent Diagnostic Tracing). Colour text tracing is not to be underestimated, as colour can be used to quickly highlight special events.

In the example above we have a macro replacement for printf that has the embedded colour commands &&x and &&_x to control foreground and background colour. Commands such as &&+ further examine the text to follow so that negative numbers are shown in red, zero in white and positive numbers in green.

Added to this are generic switch facilities that can be re-defined for each game, so that the console can turn on selected debugging switches, which in turn enable selected tracing.

All this is controlled by C++ #define macros and conditional compilation, so that the game engine sources could be full of tracing commands that were automatically compiled out when performing a build for the target platform.
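A rough sketch of this style of tracing is given here, under several assumptions: the colour letters `r`, `g` and `w`, the `ENABLE_TRACE` build flag and the names `TRACE`, `ExpandColourCodes` and `SignCode` are all invented for illustration, and ANSI escape sequences stand in for whatever console colour calls the real macro uses. `SignCode` only approximates the idea behind &&+ by letting the caller pick a colour from a number's sign.

```cpp
#include <cstddef>
#include <cstdio>
#include <string>

// Replace assumed "&&r", "&&g", "&&w" codes with ANSI colour escapes.
// Unknown codes are passed through unchanged.
inline std::string ExpandColourCodes(const std::string& text) {
    std::string out;
    for (std::size_t i = 0; i < text.size(); ++i) {
        if (i + 2 < text.size() && text[i] == '&' && text[i + 1] == '&') {
            const char* esc = nullptr;
            switch (text[i + 2]) {
                case 'r': esc = "\x1b[31m"; break;  // red
                case 'g': esc = "\x1b[32m"; break;  // green
                case 'w': esc = "\x1b[37m"; break;  // white
            }
            if (esc) { out += esc; i += 2; continue; }
        }
        out += text[i];
    }
    return out;
}

// Colour code for a value by sign: negative red, zero white, positive green.
inline const char* SignCode(int v) {
    return v < 0 ? "&&r" : (v > 0 ? "&&g" : "&&w");
}

// Tracing macro: a printf replacement in development builds,
// compiled out entirely when ENABLE_TRACE is not defined.
#ifdef ENABLE_TRACE
#define TRACE(...)                                           \
    do {                                                     \
        char buf_[512];                                      \
        std::snprintf(buf_, sizeof buf_, __VA_ARGS__);       \
        std::fputs(ExpandColourCodes(buf_).c_str(), stdout); \
    } while (0)
#else
#define TRACE(...) do { } while (0)
#endif
```

With this in place an engine source could contain a line such as `TRACE("%sscore %d\n", SignCode(score), score);` and it would cost nothing in a target build.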

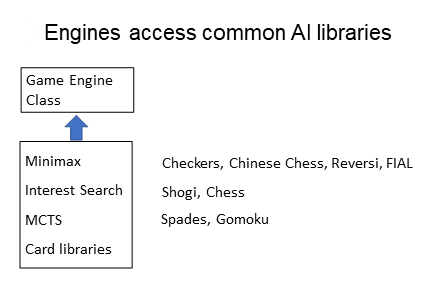

(3) Common AI Libraries

With a generic framework you might as well have generic libraries, such as minimax, Monte Carlo and card game libraries. This means that you have just one minimax that all engines can share. There is no need to add a new feature for one game and then migrate that enhancement to another game. The modification will be immediately available for all games.
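To illustrate the "one minimax for all engines" idea, here is a minimal sketch of a search written once as a C++ template and reused by any game type. The member names `Successors`, `IsTerminal` and `Evaluate`, and the toy `TakeAway` game, are assumptions made for this example and not the AI Factory library. The negamax form of minimax is used for brevity.

```cpp
#include <algorithm>
#include <limits>
#include <vector>

// One shared search. Any Game type can use it if it provides:
//   std::vector<Game> Successors() const;  // positions after each legal move
//   bool IsTerminal() const;               // true when no moves remain
//   int Evaluate() const;                  // score from the side to move's view
template <typename Game>
int Negamax(const Game& pos, int depth) {
    if (depth == 0 || pos.IsTerminal()) return pos.Evaluate();
    int best = std::numeric_limits<int>::min() + 1;
    for (const Game& next : pos.Successors())
        best = std::max(best, -Negamax(next, depth - 1));
    return best;
}

// Toy game: players alternately take 1 or 2 counters, and whoever takes
// the last counter wins.
struct TakeAway {
    int counters;

    bool IsTerminal() const { return counters == 0; }

    // With no counters left, the side to move has already lost.
    int Evaluate() const { return counters == 0 ? -1 : 0; }

    std::vector<TakeAway> Successors() const {
        std::vector<TakeAway> out;
        for (int take = 1; take <= 2 && take <= counters; ++take)
            out.push_back(TakeAway{counters - take});
        return out;
    }
};
```

Any improvement made inside `Negamax`, such as adding pruning, would then be picked up by every game type that uses it without further migration work.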

Review of (1), (2) and (3) above

This early conclusion may seem premature, but the above represents a core architecture to build anything and is intrinsically different to the sections that follow, where we are concerned with improving AI.

In this instance we planned this architecture in advance without needing retrospective re-design. The impact was huge. We had created a system that made support so much easier as all engines shared the same testbed environment. The powerful generic tracing made it much easier to investigate the behaviour of a particular game, so that you could trace out its thinking and in doing so reveal a flaw that otherwise would have been hard to find. The architecture invited the programmer to dig into the game application in a way that would have been harder with no generic support.

Creating a new engine was also simplified as you started a project with ready-made tools to allow you to build an app very quickly. Our record for creating a game from scratch to fully working playable prototype was just one day.

(4) Tournament Testing

Why is this special? The motivation for having a tournament tester is explained in Developing Competition-Level Games Intelligence. The issue is that you need to avoid the trap of developing sequences of versions, assessed by Vers1 < Vers2 < Vers3, as this has more minefields than you can imagine. A healthy baseline is to have a system for automated round robin tournaments that plays a mix of versions against each other. These teams can include oddball players that are too aggressive or timid. If your new version wins the tournament then your confidence is much stronger than any solo match.

The tournament tester is generic and has proved absolutely vital in our game testing.
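The core loop of a round robin tester can be sketched very simply. This is a hedged illustration only: the `PlayMatch` callback and the `RoundRobin` function are hypothetical names, and a real tester would also handle repeated games, seating, logging and so on.

```cpp
#include <cstddef>
#include <functional>
#include <vector>

// Hypothetical match callback: returns 1.0 if the first version wins,
// 0.5 for a draw and 0.0 if it loses.
using PlayMatch = std::function<double(std::size_t first, std::size_t second)>;

// Play every version against every other version twice (once in each
// seat) and return the total score for each version.
std::vector<double> RoundRobin(std::size_t versions, const PlayMatch& play) {
    std::vector<double> score(versions, 0.0);
    for (std::size_t a = 0; a < versions; ++a) {
        for (std::size_t b = 0; b < versions; ++b) {
            if (a == b) continue;
            const double result = play(a, b);
            score[a] += result;
            score[b] += 1.0 - result;
        }
    }
    return score;
}
```

Because the callback only receives version indices, the same loop can drive any engine, and oddball players can simply be added as extra entries.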

(5) The Rating System

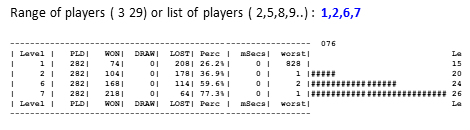

The need for this was harder to anticipate, but it seems that if you want to run many tests then the simple round-robin above can be too expensive. This is discussed in The Rating Sidekick and A Rating System for Developers. This allows the developer to squeeze much more information from a series of head-to-head matches about the significance of that result. This is mapped into an Elo-like rating, but one much more sensitive than Elo, as each new result is compared to all previous results and as a consequence the rating and rating order of all other versions may change.

The impact of this was to accelerate testing and deliver more sensitive and rapid results. This was not here at the start, so was a later entry into our toolset.
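The actual rating method is described in the articles cited above and is not reproduced here. Purely to illustrate the general idea of re-deriving every rating from the complete result history, rather than nudging two ratings per game, here is a simple stand-in: repeated passes of the standard Elo expected-score update over all stored results. The names `Result`, `Expected` and `FitRatings`, and the pass count and step size, are assumptions for this sketch.

```cpp
#include <cmath>
#include <cstddef>
#include <vector>

// One stored result: version a played version b.
// scoreA is 1.0 for a win by a, 0.5 for a draw, 0.0 for a loss.
struct Result {
    std::size_t a;
    std::size_t b;
    double scoreA;
};

// Standard Elo expected score for a player rated ra against one rated rb.
double Expected(double ra, double rb) {
    return 1.0 / (1.0 + std::pow(10.0, (rb - ra) / 400.0));
}

// Refit all ratings from scratch using the whole history, so adding one
// new result can move the rating (and order) of every version.
std::vector<double> FitRatings(std::size_t versions,
                               const std::vector<Result>& history,
                               int passes = 200, double step = 16.0) {
    std::vector<double> rating(versions, 0.0);
    for (int p = 0; p < passes; ++p) {
        for (const Result& r : history) {
            const double delta =
                step * (r.scoreA - Expected(rating[r.a], rating[r.b]));
            rating[r.a] += delta;
            rating[r.b] -= delta;
        }
    }
    return rating;
}
```

Calling `FitRatings` again after appending a new `Result` gives a fresh set of ratings for all versions, which is the property highlighted above.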

(6) The Switch Processing System

This is explained in detail in the recent article Adding New Muscle to Our AI Testing. This solves the issue of how to progress with testing multiple features using the Rating system. The issue is that although Rating offers sensitive test results, it does not analyse the performance of the features that make up each test version.

This added system allows the user to monitor the progress of each sub switch tested and effectively tells you what to test next. This massively reduced the time taken to design follow-on tests, which could in any case prove to be inefficient tests. The correct test that should have been run might never get executed using manual control.

This successfully rescued testing development that had otherwise ground to a halt. This again was not here at the start, but was added in the last year, although it is based on a system devised for Shogi some 20 years ago and then neglected.
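The real switch processing is described in the article cited above. As a much simplified illustration of what "monitoring each sub switch" could mean, the sketch below compares the mean rating of tested versions with a switch on against those with it off. The bitmask representation and the names `TestedVersion` and `SwitchEffect` are assumptions for this example, not the actual system.

```cpp
#include <cstddef>
#include <cstdint>
#include <vector>

// A tested version: which switches were on, and the rating it achieved.
struct TestedVersion {
    std::uint32_t switches;  // bit i set means switch i was on
    double rating;
};

// Mean rating with the switch on minus mean rating with it off.
// Returns 0.0 if the switch was never varied across the versions.
double SwitchEffect(const std::vector<TestedVersion>& versions, unsigned bit) {
    double onSum = 0.0, offSum = 0.0;
    std::size_t onCount = 0, offCount = 0;
    for (const TestedVersion& v : versions) {
        if (v.switches & (1u << bit)) {
            onSum += v.rating;
            ++onCount;
        } else {
            offSum += v.rating;
            ++offCount;
        }
    }
    if (onCount == 0 || offCount == 0) return 0.0;
    return onSum / onCount - offSum / offCount;
}
```

A switch that has never been varied reports no effect, which is itself a hint about what might be worth testing next.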

Final Conclusion

I considered calling this article "It's the Tools, Stupid!", to mimic a famous political slogan, but I backed off. However I think there is a real point here.

For us, the above details some major tool components that contributed to our engine development. Without these perhaps our company might not even still be around. It has been a long object lesson for us in how to develop AI and has massively accelerated our progress and reduced the required manual effort. We have watched other teams that have failed to control AI improvement well and have failed.

Our advice would be to take a broader look at your development and to invest in generic tools that add power to your development elbow and allow you to run, easily and routinely, tests that otherwise might be laborious to mimic by other means. Make it easy to do the right thing.

We may still be missing some super tool we have yet to invent, but we are certainly very much aware that we owe much of our success to the tools we have already created.

Jeff Rollason - January 2018