Copyright Notice: This article is Copyright AI Factory Ltd. Ideas and code belonging to AI Factory may only be used with the direct written permission of AI Factory Ltd.

The wide easy accessibility of Chess programs, particularly through smart phones, probably means that more often people are playing against programs than against other humans. This offers both great training and learning opportunities as well as extending recreation.

However an issue with such machine opponents is providing opponents that are satisfying to play against. Programs are now so strong that the issue is not making them any stronger, so much as providing weaker human players with satisfying games. Chess programs are not easily coaxed into making the kind of errors that weaker humans might make. This is a headache for programmers. We have tackled the issue head-on.

Our successful Chess program engine "Treebeard" has appeared in various products, including Microsoft MSN Chess, but most frequently manifests in our Android product "Chess Free", where it has peaked at over 1 million games per day. For some 5 years it has been the top ranked chess in the charts and has over double the number of reviews and ratings of any another chess app.

Part of this success could be attributed to its intelligent weakening system, which allows the program to make mistakes like a human player. This provides users with an opponent that they can lay "human" traps against which makes for a much more satisfactory opponent to play.

This article describes how we did that and why it has been possible at all.

Figure 1 Computers are strong, but may not be the most comfortable type

of opponent, particularly for beginners

Background

First we need to give some idea about how our chess is designed. Unlike essentially all other current chess programs it is not a generic variant of the usual fast tree-searching minimax program. It instead uses a very slow analysis, managing just 150,000 nodes per second on an i5, compared to some 1m to 2m nodes for programs like Komodo. This slow node rate is due to its deep analysis that uses an advanced version of SOMA (described here as used in our Shogi program) to allow multiple tactical exchanges to be statically calculated across the board in a single calculation. It understands pins, ties, promotions, mate threats and connected capture threats, which gives the program the ability to examine a node in depth and from that predict the value and outcome of existing exchanges. This depth of analysis also provides a deeper positional analysis of the board, allowing positional plays to be statically predicted.

The very slow node rate requires a radical compensating reduction in tree search size to make it viable. This was made possible by our own particular bespoke "Interest search" mechanism, which is described in detail in the book AI Pro 2 but can be downloaded for free here Interest Search . This only searches tree branches with a high probability of being meaningful; a significant departure from conventional tree search pruning, which historically examines just the moves at the current branch for pruning. Interest search essentially assesses the probability that a move is meaningful by taking the product of the interest value of all moves in the current branch. So a branch that has an inconsequential move followed by another inconsequential move will more likely be cut-off before a 5 play sequence of interesting high probability moves. This very radically reduces the tree search size and actually confines all tree branches to being plausible.

In contrast if you pause a conventional chess program minimax and examine the current branch, you will almost always find that it is looking at a junk variation. I think it is a justifiable claim that conventional minimax applied to chess does not feel like AI, but more akin to theorem proving.

The net effect of this deep analysis and interest search is that the program is much more likely to be looking at variations that a human might consider. The program is then already thinking and choosing moves more like a human player. However, since the search tree is very heavily pruned by interest search then there is more scope for mistakes. This is a trade-off cost as the improved human-like style inherits a much greater chance that it will overlook deeper tactical traps. The end result is that this engine architecture is most certainly not optimum for the highest level of play and indeed only manages master strength on a mobile phone, whereas more conventional programs such as Droidfish and Stockfish manage an outstanding ELO of maybe 3000+, making them super-human in strength.

However maybe 99%+ of human chess players are weaker than Chess master strength, and these are the people that most often access our chess program. These are the people we need to address to provide satisfying games of chess. A program that plays near master strength is not comfortably weak enough for the average human player. The next section looks at this.

Figure 2 Credible Chess weakening is not so easy

Conventional Weakening in Minimax

In conventional minimax chess programs the answer to this is to reduce the search depth. However such programs that reduce the "catch-all" search to "N" plies are still obliged to perform a capture search at the end of N plies to be able to assess the material balance. Removing capture search creates a bizarre play style where, for example, a program will endlessly sacrifice pieces to avoid an inevitable loss of the queen. This is called the "Horizon effect". However with capture search added a program will generally play very well, above middling human play, even if the primary variation depth is just one ply. This is too strong for beginners.

Minimax Hacks to Weaken Play

The first call for weakening is to add random values to the evaluation at the terminal node. This randomisation has to be substantial, otherwise the program will still successfully avoid losing material. It therefore needs to be a random value more than a pawn. This will not stop defence of larger pieces and will also result in bizarre positional errors (such as simply retreating a knight back to its home square). More workable variations of this idea might instead determine that big errors are not made all the time, so the program might play a series of moves with no material loss (low random error). This would allow other types of positional weakening, such as removing the evaluation of doubled pawns.

However these are hacks and the end product is a program that is generally just partly crippled and simply no longer plays like a real weak player. In an ideal world you want the program to make the type of error that humans might make. For a start a program faced with a board full of pieces will equally spot attacks on any pieces, equally well at any depth. Humans are different and will overlook unexpected attacks and the probability of this error increases with depth as the board becomes harder to imagine. This is what you need to emulate.

Treebeard's Approach to Modelling Human Errors

This is where Treebeard's slow node analysis reaps additional benefits. It has the time to do a more sophisticated analysis. The basis of this is to rank the chance that a tactical feature might be overlooked and that that oversight increases with depth. Treebeard models "human" errors by estimating the probability that some tactical threat might be overlooked. Hereafter this article will refer to these as "human modelled errors".

To do this, first Treebeard populates an array iPlayerMiss[] with default error values for each square:

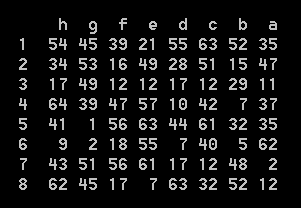

Figure 3 The iPlayerMiss array that pre-assigns errors to squares

The values here are truncated to 6 bits for illustrative purposes, but the values held are 10 bits and the sum of 2 random values to skew the result around the average random value. This value is pre-calculated so that errors are skewed towards particular squares. Doing this just once per search avoids one part of the search noticing something that another part misses. The latter would undermine the human error emulation it is trying to achieve. The higher this value, the higher the chance of overlooking a tactical threat on the square. From above the square h4 has the highest chance of error and g5 the lowest.

A further dynamic array iPlayerFocus[sq] is also maintained during the search to mark squares in the current continuation. Squares within the current continuation are less likely to be overlooked. Squares close to the current move within the continuation are even less likely to be overlooked.

The program then uses these reference arrays to determine errors in two different ways:

| 1. | Plausibility: Within the search to determine whether a move is searched at all. This is basically plausibility analysis and drives the interest search. An error here could cause the program to completely miss some critical branch in the tree, | |

| 2. | Evaluation: To modify the final evaluation, so that the static tactical analysis will make a mistake assessing the tactical outcomes and therefore return an erroneous final returned value. |

Each of these two applies a similar set of tests, so we will look at these in combination. Preceding these series of tests each square starts with a fixed probability value equivalent to "no error". With each test that might cause an error this value is eroded, so that a mistake might be a combination of causes to make the evaluation overlook a threat.

The tests performed on the side to move and opponent side attacked pieces, are as follows:

| 1. | High value targets | |

| Naturally if the piece being compromised is a high value piece, the user attention is higher, so an oversight is less likely. The King and Queen have a particularly strong skew to avoid errors. | ||

| 2. | Distance of attacker to target | |

| If the attack is from a distant square then it is more likely to be overlooked. | ||

| 3. | Attacker is in same File or Rank | |

| Attackers in same file or rank are easier to spot. | ||

| 4. | Multiple attackers | |

| If more than one piece attacks this piece then it is more likely to be noticed. | ||

| 5. | Attacking piece moved in this continuation | |

| If the attacking piece just moved, then it will be noticed. If it moved inside the continuation, but more than one move ago, it may also be noticed, but less strongly. | ||

| 6. | Defender of piece became pinned or tied during the continuation | |

| A piece that is defended does not necessarily need to be looked after, but during the continuation the defender may be pinned or tied to some other piece, so the defence may be compromised. | ||

| 7. | Error increases with depth | |

| The deeper in the tree that a tactical threat occurs, the higher chance it will be overlooked. This is probably the most important term in the error simulation. | ||

| 8. | Multiple minor tweaks, including adjustments for the number of pieces on the board. |

If the error exceeds the threshold from these tests, then no capture evaluation is undertaken on this square. This might make no difference, however in some instances a piece could be profitably captured, but it will be overlooked. For plausibility this will likely result in a failure to generate moves to either move, block or defend a piece under attack. In the evaluation it will simply cause the static evaluator to overlook a capture and therefore return an incorrect capture assessment.

Human Modelled Errors applied to an Example

The following is a simple classic trap "Fajarowicz Trap" from the Budapest Gambit. After 1. d4 Nf6 2. C4 c5 3. Dxe5 Ne4 4. Nf3 d6 5. Exd6 Bxd6 white has just played the blunder 6. g3?? (below)

Figure 4 The Fajarowicz trap after the blunder 6. .. g3??

A number of other similar moves would also be a blunder. The move Qd4 was preferable and safe. Here we now have the surprising double sacrifice by black 6. .. Ne4xPf2 7. KxNf2 8. Bd6xPg3+ and now the white queen is undefended, but white is left in check so the queen cannot be protected.

Testing this: Playing at a very low level without human modelled errors and the program immediately spots the double sacrifice of knight and bishop. However with human modelled errors turned on the program misses this simple tactical trick. However the search was the same depth with the same number of nodes visited. The error was caused by the search failing to spot that after Bd6xPg3 that the white queen is under attack. Such an oversight in perceiving an attack would be unlikely for a human player if the capture were immediately present. However if that threat only manifested deeper into the search then humans (unlike programs) become myopic and might easily overlook the threat.

By modelling this with simple criteria based on human-likely oversights we can plausibly model human frailty.

What if we instead applied a conventional Minimax Error Hack?

If we swapped out human modelled errors and instead simply randomised the evaluation, then to replicate this error would need a random addition of up to 3/4 of a pawn. 1/2 a pawn would not be enough. If that was the price for creating that level of mistake then the positional play would fall apart, completely swamped by the high random value added to the evaluation. It would make the human tactical mistake but the rest of the play would be horrible. Of course the application of such brute force randomisation is open to some improved tailoring, but the principle still holds.

What is the impact on strength of play?

This is tuneable to allow varying degrees of weakening, but head-to-head tests show that for a range of weakening levels for the same depth and node count that the program wins between 15% and 40% of games. Note that this can be overlaid by simply also reducing search depth and removal of certain positional understanding (not compromised by this modelling). A combination of a 15% win rate with added weakening via reduced search depth easily allows weakened levels to drop to 0% win rate against a stronger opponent.

In practice we have to admit that our users also still want the very lowest levels of play for complete beginners, so the very lowest levels eventually switch to substantially enhancing random play, just to provide an opponent that a complete beginner might beat.

Conclusion

The model above is highly plausible, but note that the core objective here is to actually just create an AI that people want to play. The above seems to offer a credible reason to believe that this is being satisfied, but we have no formal verifiable means of proving the quality of its human-friendly style of play. What we do have is a program that appears to be very popular and has a user rating at the top of the Google Play app store, from about some 600+ chess programs. Examining the reviews, people seem to love to play our chess, offering much adulation about how nice it is to play. We have good reason to believe we have succeeded.

All this bespoke prescriptive approach is at odds with the new shift in AI towards ideas such as Deep learning, which have no directly applied human knowledge. At first glance the latter does not obviously appear to be able to address such concerns as intelligent weakening. But maybe such a system could be trained against weak opponents to provide an additional neural net suitable for playing weaker humans. However this may simply not work well with self-play deep learning.

For now our bespoke prescriptive solution to emulating human errors does not have an obvious immediate rival, and our users appear to be very happy.

Jeff Rollason - September 2019