Copyright Notice: This article is Copyright AI Factory Ltd. Ideas and code belonging to AI Factory may only be used with the direct written permission of AI Factory Ltd.

These are more pondered thoughts on the process of controlling the development of AI for turn-taking games, a topic already discussed in various articles in earlier issues of this periodical. However the topic offers endless depth so again we step back and reflect on the issues of finding the best practical engineering solutions.

In this article we look at how AI development work can easily segment into just two over-used practices.

I have been developing game AI since 1977, with more AI programs than I can remember. In this time my methods have substantially evolved but are not unassailable. It is still easy to take wrong turns or allow myself to be kidded that I have found something that is not actually there. Therefore no holy grails, but I have had plenty of experience to learn from.

The foundation question in this article about AI development is:

"Why is it hard to get it right?"

First I'll look at the nature of AI development:

The beast we hope to understand

Human beings have little real conception how it is, with its astonishingly slow neural architecture, that the human brain can achieve such intellectual heights as it does.

If we instead try to understand a computer that serially processes problems, unlike the massive parallel architecture of the human brain, comprehension seems a much more viable goal. It therefore looks achievable, particularly if we completely designed its analytical process in the first place. Job done? Unfortunately not.

The issue is that the gigantic gulf between the speed of the computer's analysis, and our capacity to step-by-step follow the analysis, is so radically different: a difference of several orders of magnitude. Even if some fancy Berocca-like supplement allowed us to think at supreme speeds, we would still fail because the gigantic length of the path in terms of steps between the start and end of analysis does not fit the way we think, so we could not hold this path in our heads in a way that would make it understandable.

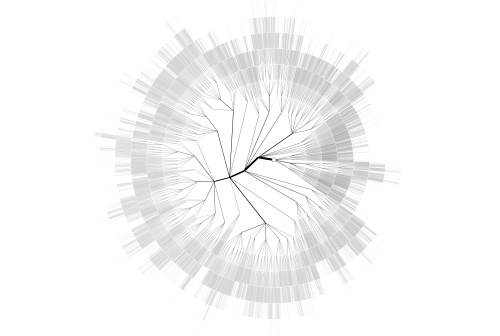

The point here is that program's thinking is therefore largely, by default, an opaque black box. We are confined to understand by having the program regurgitate its analysis in a form that we can comprehend. This can be any number of things but often visual representations offer a helpful handle to what is going on. For example the diagram below shows an MCTS search in the game of Connect 4. The radial layout allows increasingly large areas for each additional depth and this gives the developer a sense of how the program is spreading its analysis time. This kind of representation can be invaluable to spot structural changes in search that would be hard to otherwise detect.

Methods available

I'm going to start here from the premise that we are looking to continue to develop an AI that already exists and that we are simply trying to improve it. I'm not considering how we start. In this I'm going to make a simplification and identify two core common key development practices, as follows:

(1) Testing testing testing...Did it win?

The premise here is that we tweak and test the program to see if it "improves"

the performance. As discussed at length in Evaluation

options - Overview of methods and Lessons

from the Road - Championship level AI development this is a very "difficult"

area with many ways to get it completely wrong. Passing over these difficulties,

automated testing is an unavoidable step. You cannot really be sure that the

program is "better" unless you can show that it actually delivers

a better win rate (or whatever the measure of success might be). However we

are at arm's length from the AI as soon as we are churning out bulk wins and

losses.

(2) Become an Ant and work on some detail in the analysis

I say "Ant" here as it is often only possible to address confined

areas of the AI's complete capability at any one time in trying to improve

it. This does not include "tweaks" where the user increases some

heuristic value from 1.1 to 1.15 to see what happens. The latter belongs to

"1" above. We are instead looking at specific instances to be analysed

in depth and the coding to explicitly remedy or enhance the program. In that

respect we are often functioning like ants touching up the painting of the

Mona Lisa. We do not easily see the big picture but trust that the one small

spot we are currently working on is being re-touched in a suitable manner

to achieve some good overall result.

The critical attribute here is the radical difference in level of assessment. "1" is a statistical number assessment of maybe many hundreds of games in response to tweaks and "2" is often deeply concentrated on just one position in just one game, perhaps backed up with testing multiple cases from different games. The gulf between 1 and 2 can be huge.

The above omits any number of possible bridging analytical work with bespoke abstractions that reduce the game analysis into some form where we get a meaningful overview of what is happening, such that we can comprehend and work on a solution. This might be some visual key (as shown earlier) or a statistical breakdown. Our programs have many of these and they are absolutely critically important.

What actually happens in practice

However day-in day-out my thesis is that AI development is usually not like this. Despite the attraction of the bridging methods above, I believe that in practice much of the time of AI developer's work actually easily falls into just "1" and "2" above. Since these two methods do not obviously mesh into one single obvious framework to follow, as they are very different, this is hard to get right.

This is often driven by hope and a desire to have an easy life. "1" above is seductive as we can do a tiny piece of work or even a moderate algorithmic piece of work (which may lack rigor) drop into the testing framework and walk away while all our machines grind out the numbers. The numbers pop-up and we start drawing conclusions and maybe even setting ourselves on a path to delusion and purgatory as we often really only know that the numbers went up rather than down. As detailed in Evaluation options - Overview of methods and also Statistical Minefields with Version Testing and Digging further into the Statistical Abyss, there are endless opportunities to delude ourselves.

We then dip into "2", which is often satisfying as we are "in close" with the AI so can feel convinced we are at one with the AI process. We will still end up jumping to "1" when we want to assess this. But this then again removes us from seeing what is actually happening in broader terms.

The issue is the gulf between these processes, where we move from being "up close", to a "highly remote" perspective, with no automatically offered bridge between these two states.

Making this work

I'd love to roll off magic bullets here but actually I'm just going to offer some remedial strategies:

(a) One solution is to write that bridging analysis that gives us viewports

into how the AI is doing, and this is highly desirable. This may identify

skews and behaviours that are otherwise invisible. To do this though requires

more time diverted to making the right analyses, which basically depends on

intuition about what you actually need. Having committed to particular analytical

tools you may become overly dependent on these and in consequence be distracted

from seeing things around you that the analysis cannot show.

Advice? Just get good at doing this! Ideally design your methods to be amenable

to be recycled and re-combined. Be prepared to make the effort.

(b) Just watch the game play and stop it to save issues that you may spot.

This may seem obvious but is "safe" in that it invites you to view

all parts of the game, so you get something like a "big picture".

It is still limited in that it might take many games before some horrible

consequence of recent work manifests and you might not get there.

Advice? If convenient, watch games against completely different AI programs,

to avoid incestuous collaboration of the AI to skirt around newly created

weaknesses.

(c) Get input from human beta testers. What we have done is to allow beta

testers to play the game in the target app and click to send the game situation

in an e-mail, with comments. This is very good as other people will find holes

you can never find.

Advice? Make sure the beta tester can send explicit examples. Beta input with

generalisations about all play is often not helpful enough as you find you

need them to qualify their explanation, but then they often do not have instances

they can easily share.

(d) Write plenty of diagnostic display code in your program to tell you what

it is doing. It may tell you what you are already sure it must be doing, but

at times it will tell you something that will indicate that it has made some

mistake or is doing the wrong thing.

Advice? Make a good generic system to allow you to insert such tracing in

such a way that it is easily automatically disabled in release.

(e) Kick yourself out of testing "1" to force yourself to actually

dip into your program and see what it actually does. "1" is a poisoned

chalice if over-used.

Advice? Break up your time. Force yourself to watch some games. The numbers

are just numbers.

Conclusion

The point of this article was just to make this dichotomy of working practices very clear. It is too easy to kid yourself that you are "on top" of your development process. This article is very limited but at least be aware of the gulf to be bridged. Other articles cited in this series are needed to get the fuller picture.

Jeff Rollason - October 2016